How to Create AI Agents using Claude Agent SDK (A Step by Step Guide)

Artificial intelligence agents are transforming how we automate complex workflows, perform multi-step tasks, and interact with data and external services.

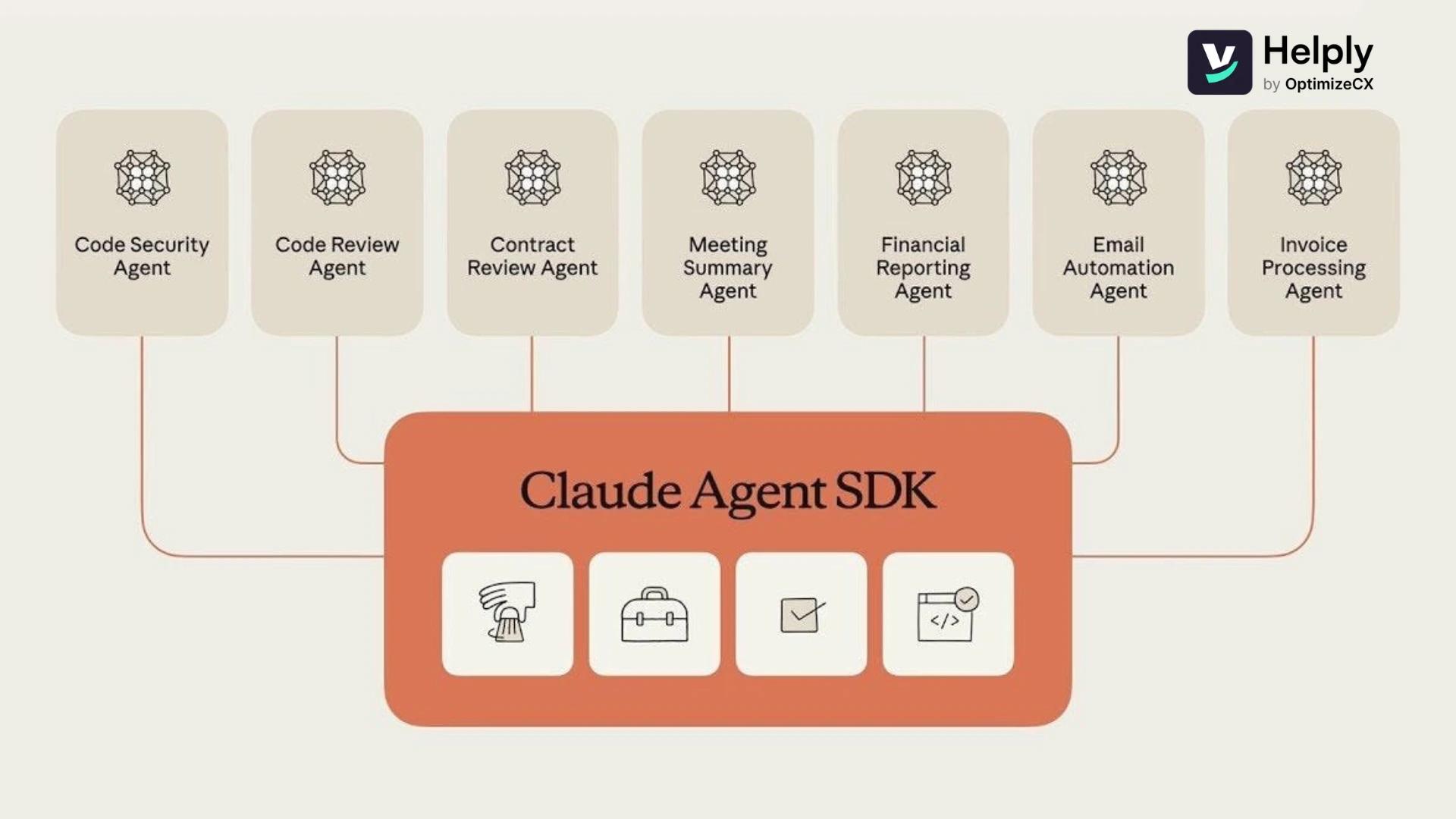

Among the leading tools empowering developers to build such AI agents is the Claude Agent SDK from Anthropic.

This SDK lets you build reliable, production-ready AI agents on Anthropic's Claude models, such as Claude Opus and Claude Sonnet, using the same agent harness that powers Claude Code. Agents built with it can execute code, operate on files, search a codebase, and more.

In this guide, we’ll walk you through everything you need to know about the Claude Agent SDK—from installation and setup to building specialized agents with custom tools and managing context effectively.

Whether you want to build general-purpose agents or highly specialized AI assistants, this step-by-step tutorial will help you harness the full potential of the agent SDK to build agents that are both powerful and safe.

What is the Claude Agent SDK, and How Does it Work?

The Claude Agent SDK is a developer toolkit for building interactive AI agents powered by Anthropic's Claude models, such as Claude Opus. It is built on the same foundation as Claude Code, Anthropic's agentic coding tool.

These AI agents can autonomously perform tasks by gathering context, executing code, interacting with external APIs, managing filesystems, and more—all while following a structured loop of reasoning and action.

The key design principle behind the Claude Agent SDK is to give agents access to a computer. Running agents in a sandboxed container isolates their processes and limits the damage a prompt injection or a bad command can cause, although it does not prevent prompt injection by itself. The SDK also supports subagents, which lets independent tasks run in parallel.

At its core, the SDK enables you to:

- Define an agent’s role and behavior through system prompts and configuration.

- Use built-in or custom tools to extend your agent’s capabilities.

- Manage context efficiently with automatic prompt caching and session management.

- Execute multi-step tasks with streaming interaction and built-in error handling.

- Safely operate with permission modes that block dangerous commands and require human approvals.

- Utilize checkpoints that automatically save an agent's progress, allowing for instant rollback during development.

The SDK works by connecting your code to Anthropic’s Claude API via your Claude API key, allowing you to build production-ready agents that can be embedded in Python or TypeScript applications.

How to Create an AI Agent with Claude Agent SDK

Creating an AI agent with the Claude Agent SDK involves the following steps, from setup to deployment:

Step #1. Set up prerequisites

- Choose a language. The SDK is available for TypeScript (Node.js/web) and Python. Pick whichever fits your stack.

- Install system dependencies. Ensure you have:

  - Python ≥ 3.10 and Node ≥ 18 (for the CLI tools).

  - An Anthropic API key from the Claude Console. Set it in the environment as ANTHROPIC_API_KEY. If you use Bedrock or Vertex AI, set the appropriate environment variables (CLAUDE_CODE_USE_BEDROCK or CLAUDE_CODE_USE_VERTEX).

- Install the CLI and SDK:

  - Install the Claude Code CLI globally: npm install -g @anthropic-ai/claude-code or run the installer script (curl -fsSL https://claude.ai/install.sh | bash).

  - Install the Agent SDK library: pip install claude-code-sdk (Python) or npm install @anthropic-ai/claude-agent-sdk (TypeScript). The Python package has since been renamed claude-agent-sdk (imported as claude_agent_sdk, with ClaudeCodeOptions renamed to ClaudeAgentOptions); the Python examples in this guide use the original claude_code_sdk names.

- Verify installation. Run claude doctor to ensure the CLI works, and test a simple streaming query to confirm your SDK install (e.g., query “What is 2+2?”).
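Before running a first query, it can help to confirm which credentials the environment actually exposes. The following is a minimal sketch using only the standard library; the helper name resolve_provider is hypothetical, and treating any non-empty value of the provider flags as "enabled" is an assumption rather than documented SDK behavior.

```python
import os


def resolve_provider(env):
    """Guess which backend the environment is configured for.

    `env` is any mapping of environment variables (for example os.environ).
    Assumption: a non-empty provider flag means that provider is enabled.
    """
    if env.get("CLAUDE_CODE_USE_BEDROCK"):
        return "bedrock"
    if env.get("CLAUDE_CODE_USE_VERTEX"):
        return "vertex"
    if env.get("ANTHROPIC_API_KEY"):
        return "anthropic"
    return None


if __name__ == "__main__":
    provider = resolve_provider(os.environ)
    if provider is None:
        print("No credentials found: set ANTHROPIC_API_KEY or a provider flag.")
    else:
        print(f"Configured provider: {provider}")
```

This only inspects environment variables; it does not contact any API, so claude doctor remains the real check that the CLI works.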

Step #2. Understand the agent loop

The Claude Agent SDK follows a structured loop of gathering context → taking action → verifying work → repeating. The SDK includes built-in features for error handling, session management, and monitoring to support production-ready applications.

Agents use tools to fetch context (files, APIs), perform actions (running code, calling APIs), then check the results and decide whether to continue.

This loop is designed to let agents act autonomously while giving you oversight and control.
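The shape of that loop can be sketched without any model at all. The toy below is an illustration only: the "agent" simply counts toward a numeric goal, and every name in it (LoopState, gather_context, take_action, verify, run_loop) is hypothetical rather than part of the SDK.

```python
from dataclasses import dataclass, field


@dataclass
class LoopState:
    goal: int
    value: int = 0
    history: list = field(default_factory=list)


def gather_context(state):
    # Stand-in for reading files or calling APIs: how far are we from the goal?
    return state.goal - state.value


def take_action(state, remaining):
    # Stand-in for a tool call: move at most 3 units toward the goal.
    step = min(3, remaining)
    state.value += step
    state.history.append(step)


def verify(state):
    # Stand-in for running tests or linting: has the goal been met?
    return state.value == state.goal


def run_loop(state, max_turns=5):
    """Repeat gather -> act -> verify; return the turn that succeeded, or None."""
    for turn in range(1, max_turns + 1):
        remaining = gather_context(state)
        take_action(state, remaining)
        if verify(state):
            return turn
    return None  # turn budget exhausted without passing verification
```

The max_turns cap plays the same role as a turn limit on a real agent: it bounds how long the loop can run when verification never succeeds.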

Gathering context

- The SDK can perform agentic search on a file system using typical developer commands (grep, find, glob) to pull relevant files into context.

- You can also implement semantic search by embedding and querying vectors, but Anthropic recommends starting with agentic search and adding semantic search only if speed is critical.

- Subagents can run in parallel to collect specific information and send back only relevant snippets.

- The Files API allows agents to access documents up to 500 MB and store information beyond the immediate context window.
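To make "agentic search" concrete, here is a rough standard-library equivalent of combining glob and grep: walk a directory, keep files that match a pattern, and return only the matching lines. The function name grep_files and its parameters are assumptions for illustration; the SDK's built-in tools have their own interfaces.

```python
import re
from pathlib import Path


def grep_files(root, glob_pattern, regex, max_hits=20):
    """Return (relative_path, line_number, line) tuples for lines matching regex."""
    pattern = re.compile(regex)
    hits = []
    for path in sorted(Path(root).rglob(glob_pattern)):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        for number, line in enumerate(text.splitlines(), start=1):
            if pattern.search(line):
                hits.append((str(path.relative_to(root)), number, line.strip()))
                if len(hits) >= max_hits:
                    return hits  # cap the amount of text pulled into context
    return hits
```

Returning short snippets instead of whole files is the point: it keeps the context window for the lines that matter.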

Taking action

- Tools are the primary way your agent acts. Built‑in tools include file operations (Read/Write/Grep/Glob), Bash for shell commands, web fetch for API calls, and multi‑language code execution.

- You can design custom tools to extend the agent. Each tool needs a descriptive name, a schema for its arguments, and a function that returns results. Custom tools are served through a Model Context Protocol (MCP) server, and the agent calls them as part of its loop. MCP also provides standardized integrations to external services.
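The three ingredients of a tool (a name, an argument schema, and a function) can be illustrated in plain Python. This is a conceptual sketch, not the SDK's tool mechanism: ToolSpec and word_count are hypothetical names, and the schema here is just a mapping from argument name to Python type.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolSpec:
    name: str
    description: str
    schema: dict  # argument name -> expected Python type
    handler: Callable[[dict], Any]

    def call(self, args):
        # Reject missing, unexpected, or wrongly typed arguments before running.
        missing = set(self.schema) - set(args)
        extra = set(args) - set(self.schema)
        if missing or extra:
            raise ValueError(
                f"bad arguments: missing={sorted(missing)} extra={sorted(extra)}"
            )
        for key, expected in self.schema.items():
            if not isinstance(args[key], expected):
                raise TypeError(f"{key} must be {expected.__name__}")
        return self.handler(args)


word_count = ToolSpec(
    name="word_count",
    description="Count whitespace-separated words in a string",
    schema={"text": str},
    handler=lambda args: len(args["text"].split()),
)
```

Validating arguments against the schema before the handler runs means a malformed tool call fails with a clear error the agent can read and correct.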

Verification and repetition

- After each action, the agent can verify the output (e.g., run tests or linting, check file contents, or present results to a human for approval).

- Use permission modes to control autonomy: default asks for approval before running tools that have not been pre-approved; acceptEdits auto-approves file edits; bypassPermissions skips permission prompts entirely (recommended only in trusted, sandboxed environments).

- Verification combines rules-based checks, visual feedback, and optional LLM assistance in the SDK. Verification of work is essential for agents to self-correct and improve their outputs over time.
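A rules-based check is the simplest of these to sketch. The example below verifies generated Python source with the standard ast module: it must parse, and it must not call eval(). The function name check_python_source and the choice of eval() as the rule are assumptions made for illustration.

```python
import ast


def check_python_source(source):
    """Return (ok, message) for a rules-based check of Python source text."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return False, f"syntax error on line {exc.lineno}: {exc.msg}"
    # Example rule: flag direct eval() calls, which a security review would reject.
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == "eval"
        ):
            return False, f"eval() call on line {node.lineno}"
    return True, "ok"
```

The message returned on failure is what makes self-correction possible: fed back to the agent, it says what to fix and where.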

Step #3. Define the agent’s role and operational options

Use the SDK’s options object to set the agent’s role, working directory, allowed tools, and permission strategy. A typical configuration looks like this (Python example):

    from claude_code_sdk import ClaudeCodeOptions

    # Define the agent's role and working directory
    options = ClaudeCodeOptions(
        system_prompt="You are a Python code reviewer focused on security",
        cwd="/path/to/project",
    )

    # Set operational parameters
    options.allowed_tools = ["Read", "Grep"]  # Tools the agent can use
    options.permission_mode = "default"  # or "acceptEdits", "bypassPermissions"
    options.max_turns = 5  # Limit the number of iterations

This defines a “code reviewer” agent that works within a given directory and can only read or search files until a human approves further actions.
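The effect of an allow-list can be modeled in a few lines. This is a deliberately simplified sketch of the idea, not the SDK's internal permission logic; decide and ask_human are hypothetical names.

```python
def decide(tool_name, allowed_tools, ask_human):
    """Return True if a tool call may run.

    Tools on the allow-list run without a prompt; anything else needs an
    explicit human decision via the ask_human callback.
    """
    if tool_name in allowed_tools:
        return True
    return bool(ask_human(tool_name))


if __name__ == "__main__":
    allowed = ["Read", "Grep"]
    deny_all = lambda tool: False  # a reviewer who rejects every request
    print(decide("Read", allowed, deny_all))
    print(decide("Bash", allowed, deny_all))
```

With the reviewer configuration above, read and search calls pass straight through, while a shell command is held until someone approves it.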

Step #4. Run the agent via the high‑level interface

Start interacting with your agent by calling the high‑level query function or by creating a persistent client:

    import anyio

    from claude_code_sdk import (
        AssistantMessage,
        ClaudeSDKClient,
        TextBlock,
        ToolUseBlock,
        query,
    )

    # `options` is the ClaudeCodeOptions object defined in Step #3.

    # Option A: one-off query (streaming)
    async def run_query():
        async for msg in query(prompt="Review main.py for security issues", options=options):
            # Only assistant messages carry the content blocks we want to print.
            if not isinstance(msg, AssistantMessage):
                continue
            for block in msg.content:
                if isinstance(block, TextBlock):
                    print(block.text)
                elif isinstance(block, ToolUseBlock):
                    print(f"Using tool: {block.name}")

    # Option B: persistent client for multi-turn interactions
    async def run_client():
        async with ClaudeSDKClient(options=options) as client:
            await client.query("Find all SQL queries in the codebase")
            async for response in client.receive_response():
                # Handle responses the same way as in run_query()
                pass

    anyio.run(run_query)

The query API streams back messages that include either text responses or instructions to use tools.
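The consuming side of such a stream can be exercised offline. The sketch below uses stand-in classes and a simulated async generator so it runs without the SDK or an API key; TextBlock and ToolUseBlock here are local stand-ins defined for the example, and fake_stream and summarize are hypothetical names.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class TextBlock:  # local stand-in, not the SDK class
    text: str


@dataclass
class ToolUseBlock:  # local stand-in, not the SDK class
    name: str
    input: dict


async def fake_stream():
    # Simulates a response that interleaves text with a tool request.
    yield TextBlock("Scanning main.py")
    yield ToolUseBlock("Grep", {"pattern": "execute\\("})
    yield TextBlock("Found 1 query built with string formatting")


async def summarize(stream):
    """Collect one display line per block, marking tool requests."""
    lines = []
    async for block in stream:
        if isinstance(block, TextBlock):
            lines.append(block.text)
        elif isinstance(block, ToolUseBlock):
            lines.append(f"[tool] {block.name}")
    return lines


if __name__ == "__main__":
    print("\n".join(asyncio.run(summarize(fake_stream()))))
```

Keeping the block-handling logic in its own function like this makes it testable against a fake stream before pointing it at a live agent.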

Step #5. Add custom tools and subagents

To extend functionality beyond the built‑in tools, implement custom tools, and define subagents:

- Create custom tools using the @tool decorator. Each tool should describe its purpose and argument schema, then implement the logic. Tools are registered on an MCP server and appended to options.allowed_tools:

      from claude_code_sdk import tool, create_sdk_mcp_server

      # Arguments: tool name, description, and input schema (argument name -> type)
      @tool("security_scan", "Scan code for vulnerabilities", {"file": str})
      async def security_scanner(args):
          file_path = args["file"]
          # ... custom scanning logic ...
          return {"content": [{"type": "text", "text": f"Scanned {file_path}"}]}

      server = create_sdk_mcp_server(
          name="security-tools",
          version="1.0.0",
          tools=[security_scanner],
      )

      # Register the server, then allow the tool as mcp__<server key>__<tool name>
      options.mcp_servers = {"security": server}
      options.allowed_tools.append("mcp__security__security_scan")

- Define subagents by creating Markdown files in .claude/agents/ with YAML front‑matter specifying their role, allowed tools, and model. For example:

      ---
      name: sql-expert
      description: Analyzes and optimizes SQL queries
      tools: Read, Grep
      model: sonnet
      ---

      You are an SQL optimization expert. Focus on query performance and security.

The main agent can delegate SQL-related tasks to this subagent automatically. Subagents run with isolated context windows and are invoked when the main agent encounters a task that matches their description.
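Because a subagent file is just front matter plus a prompt, it is easy to validate before committing it. The parser below is a minimal sketch that handles only flat key: value lines, not full YAML; parse_subagent is a hypothetical helper, not an SDK function.

```python
def parse_subagent(text):
    """Split a subagent file into (metadata dict, prompt string).

    Handles only flat `key: value` front matter delimited by `---` lines.
    """
    lines = text.strip().splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("missing opening --- line")
    end = None
    for index in range(1, len(lines)):
        if lines[index].strip() == "---":
            end = index
            break
    if end is None:
        raise ValueError("missing closing --- line")
    meta = {}
    for line in lines[1:end]:
        if not line.strip():
            continue
        key, sep, value = line.partition(":")
        if not sep:
            raise ValueError(f"not a key: value line: {line!r}")
        meta[key.strip()] = value.strip()
    prompt = "\n".join(lines[end + 1:]).strip()
    return meta, prompt
```

A check such as asserting that name and description are present catches a malformed file early, before the main agent silently fails to find the subagent.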

Step #6. Manage context and memory

- Automatic context compaction. The SDK summarizes older messages when near the context limit, ensuring long-running agents stay within the model’s context window.

- Persistent memory. The CLI and SDK look for CLAUDE.md or .claude/CLAUDE.md files to maintain persistent context across sessions.

- Agentic vs semantic search. Use file search (grep, glob) as your default; add semantic search (vector embeddings) only if your agent needs faster or more abstract retrieval.
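The idea behind compaction can be shown with a toy version. In this sketch, token counts are approximated by word counts and the "summary" is a placeholder string, whereas a real agent would have the model write the summary; estimate_tokens and compact are hypothetical names.

```python
def estimate_tokens(message):
    # Crude assumption: one token per whitespace-separated word.
    return len(message.split())


def compact(messages, limit, keep_recent=2):
    """Replace older messages with one summary line when over the limit."""
    keep_recent = max(1, keep_recent)  # always keep at least the latest message
    total = sum(estimate_tokens(m) for m in messages)
    if total <= limit or len(messages) <= keep_recent:
        return list(messages)
    older = messages[:-keep_recent]
    recent = messages[-keep_recent:]
    summary = f"[summary of {len(older)} earlier messages]"
    return [summary] + list(recent)
```

Keeping the most recent messages verbatim preserves the detail the agent is actively working with, while older turns shrink to a summary.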

Step #7. Apply best practices

- Start small. Build simple agents first, such as one-shot tasks or single-tool automation, before composing complex workflows.

- Use least-privilege permissions. Grant only the tools your agent truly needs and set permission_mode to default or acceptEdits to ensure oversight.

- Design clear system prompts. Define the agent’s role and guardrails to align behavior. Use explicit instructions to prevent unintended actions.

- Monitor and iterate. Test your agent’s performance, adjust tools and prompts, and monitor sessions for errors or unexpected actions. Use hooks to prevent dangerous commands (e.g., block rm -rf in Bash).

- Explore extensions. Integrate external databases, APIs, or vector stores via MCP to give your agent domain‑specific abilities.

- Develop agents for diverse tasks. Agents can be developed for tasks including code reviews, customer support, financial analysis, and deep research.
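The decision logic a hook might use to block rm -rf can be sketched independently of how hooks are wired into the SDK. The function below is an assumption-laden illustration: is_dangerous is a hypothetical name, and it inspects only the first command in the string, so chained commands (for example after && or ;) are not caught.

```python
import shlex


def is_dangerous(command):
    """Return True for rm invocations that combine recursive and force flags.

    Limitation: only the first command in the string is inspected.
    """
    try:
        tokens = shlex.split(command)
    except ValueError:
        return True  # unparseable input is treated as unsafe
    if not tokens or tokens[0] != "rm":
        return False
    short_flags = "".join(
        t.lstrip("-") for t in tokens[1:] if t.startswith("-") and not t.startswith("--")
    )
    long_flags = {t for t in tokens[1:] if t.startswith("--")}
    recursive = "r" in short_flags or "R" in short_flags or "--recursive" in long_flags
    force = "f" in short_flags or "--force" in long_flags
    return recursive and force
```

A pattern check like this is a backstop, not a sandbox: it complements least-privilege tool lists and container isolation rather than replacing them.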

By following these steps, you can create robust AI agents that operate autonomously yet safely using Anthropic’s Claude Agent SDK.

Create a Conversational AI Agent with Helply Today

If you’re looking to implement AI-powered customer support agents that reduce support costs and improve satisfaction, consider Helply.

Helply is a self-learning conversational AI support agent designed to plug into your help desk and knowledge base. It automatically resolves over 70% of Tier-1 customer inquiries instantly, 24/7.

It helps reduce ticket volume by enabling users to chat directly with the bot instead of searching through articles.

Key Features of Helply

Helply has the following features:

1. Training the AI Agent

Helply trains on diverse content types, including:

- Knowledge base articles (via integrations like Zendesk and Google Docs)

- Web links (by crawling content)

- Manually pasted text

- Uploaded files

- Custom Q&A pairs

This continuous enrichment ensures the agent stays up to date and knowledgeable.

2. Agent Configuration

Customize the agent’s look and feel, persona (friendly, plainspoken, playful, etc.), styling rules (such as bolding key words), and response style (short, crisp answers).

You can also define fallback behaviors for when the bot doesn’t know an answer, such as triggering a form or suggesting contacting support.

3. Actions Beyond Q&A

Helply can perform interactive and transactional tasks beyond answering questions, including:

- Triggering emails

- Calling APIs (e.g., Stripe to retrieve invoices)

- Executing product-specific workflows, like creating help articles

Other features include:

4. Contacts & Personalization: The agent can leverage user-specific data (such as plan type or permissions) to tailor responses, providing personalized answers like “You’re on a Premium plan, so yes, you have this feature.”

5. Conversations Dashboard: Access past user conversations to gain insights, perform training, and conduct quality checks to continuously improve the agent’s performance.

6. Gap Finder: Helply analyzes support tickets to detect common questions the bot can’t yet answer, highlight knowledge gaps, and help you train the bot on these topics to improve future coverage.

7. Easy Deployment: Helply provides embed codes to add the agent to your website or help center, allowing users to chat with the bot wherever you deploy it.

Experience the power of AI-driven self-service support! Book a FREE demo today and see how conversational AI agents can transform your customer support.

